Tag: Autonomous

Meet the ALL-NEW NVIDIA AGX Orin Developer Kit for Edge AI applications

Last month, NVIDIA announced the availability of the Jetson AGX Orin Developer Kit, which delivers up to 275 trillion operations per second (TOPS) for energy-efficient advanced robotics and machine learning applications. There has been a lot of interest in the new NVIDIA developer kit, which offers 8...


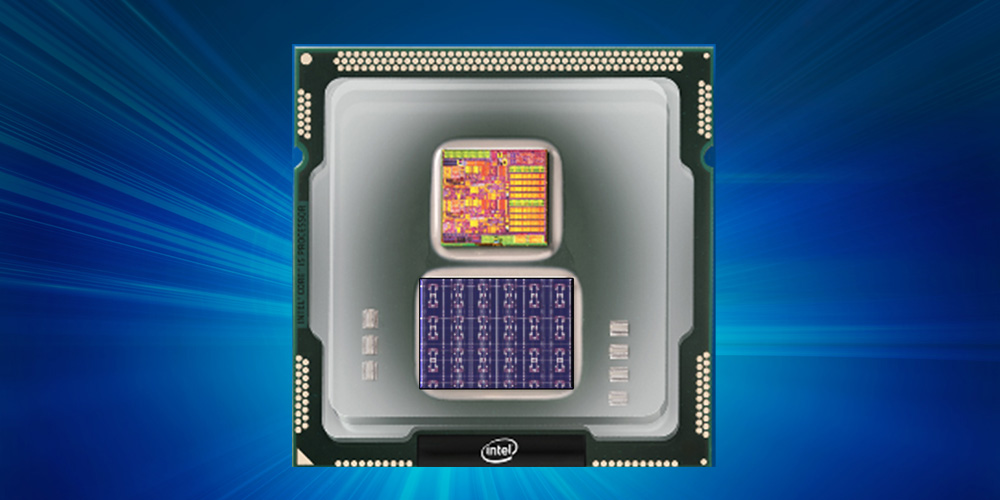

Intel Introduces Loihi – A Self-Learning Processor That Mimics Brain Functions

Intel has developed a first-of-its-kind self-learning neuromorphic chip, codenamed Loihi. It mimics the way an animal brain works, learning how to operate from various modes of feedback from its environment. Unlike convolutional neural network (CNN) and other deep learning processors,...


Role Of Vision Processing With Artificial Neural Networks In Autonomous Driving

Over the next 10 years, technological advances will bring more change to the automotive industry than it has seen in the last 50. One of the largest changes will be the move to autonomous vehicles, commonly known as self-driving cars. Scientists at many universities are striving to...
