Tag: Intel

QNAP Thunderbolt-4 All-Flash NASbook is Designed for Video Production with Hot-Swappable M.2 SSDs

The QNAP TBS-h574TX compact NASbook, with a 13th Gen Intel Core processor, high-speed I/O, and fast storage interfaces, is built for video production, post-production, and small studios. The device comes in two versions: one with an i5-1340PE CPU featuring 12 cores and 16 threads, a clock range of 1.80 GHz to 4.50 GHz, and...

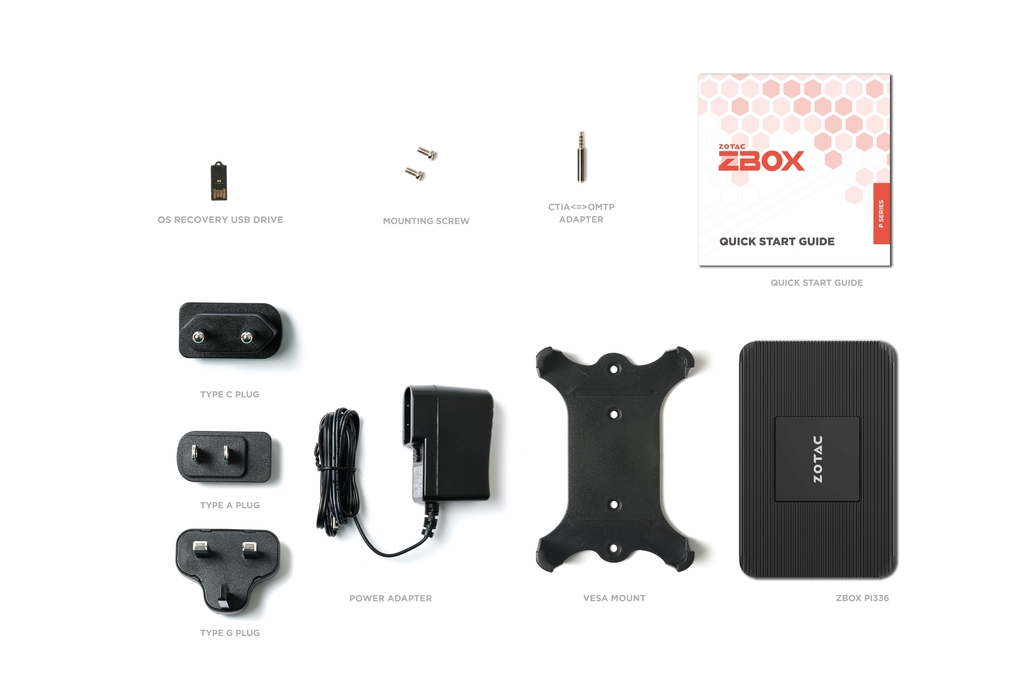

ZOTAC Announces a Powerful Portable PC with Extremely Small Form-Factor – ZBOX PI336 pico

The global manufacturer of innovation, ZOTAC Technology, recently announced a range of products including the ZBOX PI336 pico, the smallest full-fledged PC, a wearable PC, the new thinnest professional workstation, and a series of powerful graphics cards. The new ZBOX PI336 launched by...

Intel’s Habana Labs designs next-gen AI processors – Gaudi2 and Greco

Intel’s data-center-focused Habana Labs has announced its second-generation deep learning processors for training and inference deployment in the data center: Habana Gaudi2 and Habana Greco. The new design is meant to reduce entry barriers for companies of all sizes leveraging...

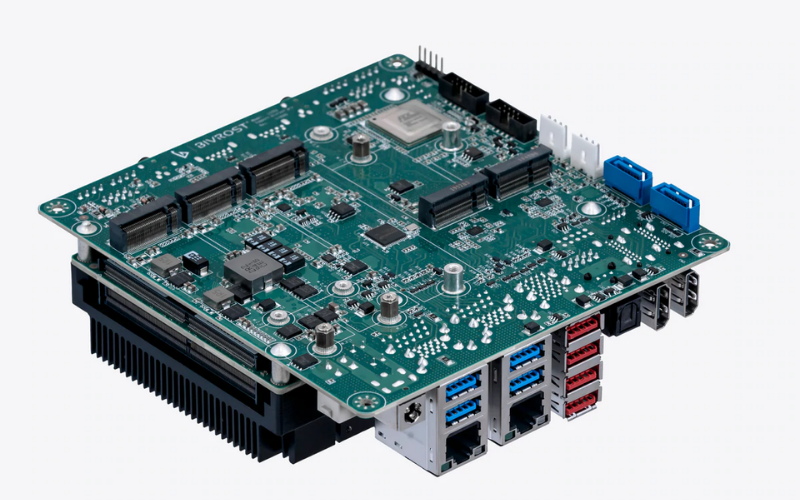

BIVROST Lite5 mini-STX platform provides up to 96GB RAM for edge computing and machine vision applications

The BIVROST Lite5 STX platform is a 5-inch mini-STX motherboard specifically designed to accommodate the increasing demands of AI/ML, edge computing, and Industry 4.0 applications. The Lite5 has been built to increase I/O capacity, utilize the capabilities of the unified memory...

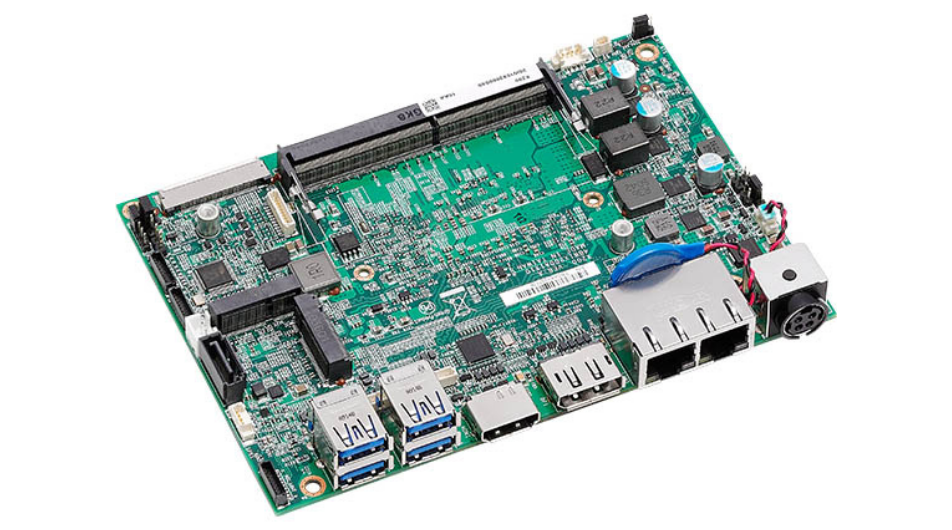

NEXCOM Unveils X200 Embedded Computer Board For Healthcare Applications

Taiwan-based embedded electronic device manufacturer NEXCOM has unveiled the X200, a 3.5-inch embedded computer board built around an 11th Generation Intel Core processor. The compact, high-performance board is designed to drive digital transformation in AI image processing...

Intel’s Loihi 2 – Next-Generation Neuromorphic Research Chip

The shift from offline training on labeled datasets in parallel computing to on-the-fly learning through neuron firing rules in neuromorphic computing has significantly helped meet the ever-increasing demand for artificial intelligence in a range of applications. Intel’s first-generation...

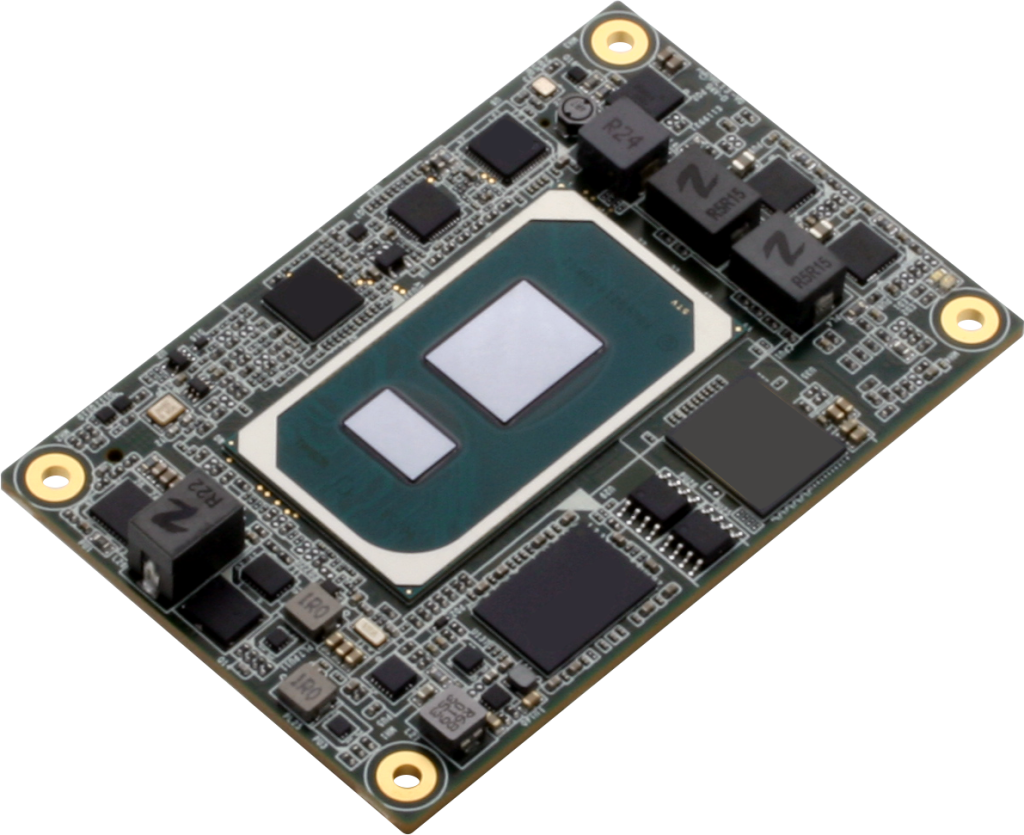

AAEON Launches NanoCOM-TGU, a COM Express Type 10 Powered by the 11th Generation Intel® Core™ Processor Family

To optimize embedded and mobile applications for today’s IoT requirements, AAEON, a leading manufacturer of advanced hardware platforms for SDN, NFV, SD-WAN, and white box uCPE solutions, today announced the launch of NanoCOM-TGU, a COM Express Type 10 module powered by the 11th Generation...

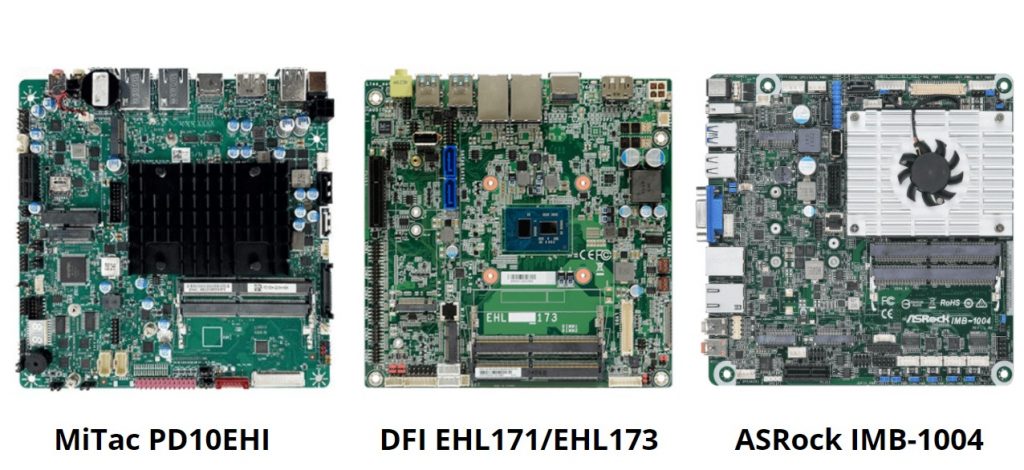

Elkhart Lake Processors Power Thin Mini-ITX motherboards for IoT applications

To support the next generation of IoT edge devices, Intel has developed a new line of processors enhanced for IoT. Compared with 14nm-generation processors, the new Elkhart Lake processor offers up to 1.7x better single-thread, 1.5x better multi-thread, and 2x better graphic...
