“TinyML is proof that good things come in small packages.” That is how ARM describes it. The promise behind TinyML is a different approach to machine learning: running optimized models on small, efficient microcontroller-based endpoint devices instead of on bulky, power-hungry computers in the cloud. Backed by ARM and industry leaders such as Google and Qualcomm, TinyML has the potential to change how we handle the data gathered by IoT devices, which have already spread into almost every industry we can imagine.

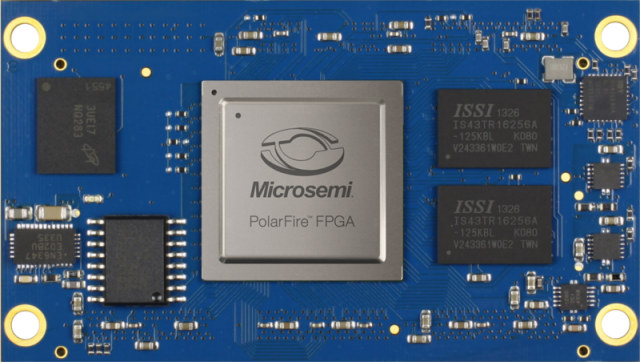

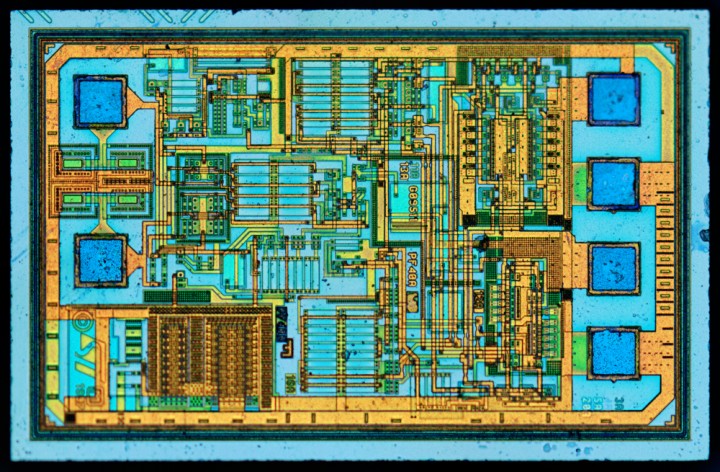

But why run machine learning on microcontrollers? The answer is simple: they are everywhere! Families such as the ARM Cortex-M are efficient and reliable, and they offer decent computing performance for their size. That means they can be fitted almost anywhere, left there, and forgotten about. Moreover, they are really cheap. Machine learning on microcontrollers lets us process the data created by our IoT devices directly on those devices and perform more sophisticated operations on it. But it does not stop there. Giving microcontrollers these capabilities makes endpoint devices more independent, because they no longer need an internet connection to send data back and forth to the cloud. This reduces latency, lowers energy consumption, and improves security: the data leaves the microcontroller less often, so it is less exposed to attacks.

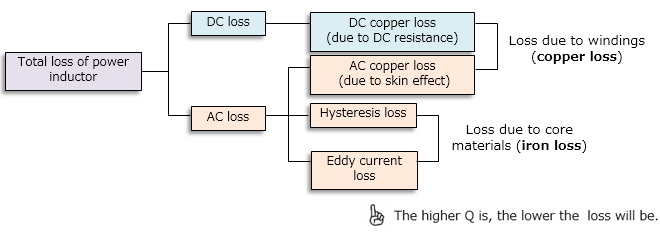

To bring ML algorithms to these small boards, the complexity of the mathematical operations involved had to be reduced. Data scientists achieved this with several techniques, such as quantization, which replaces 32-bit floating-point operations with simpler 8-bit integer operations. The result is models adapted to the platform. They need less memory and processing power and run more efficiently, without sacrificing too much accuracy. Of course, they cannot completely replace cloud models, but they are good enough for many use cases. In addition, ARM is designing hardware to accelerate inference, which should further boost the already impressive performance we are getting.
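To make the 8-bit idea more concrete, here is a minimal sketch of affine (scale and zero-point) int8 quantization in plain Python. The article does not say which scheme any particular toolchain uses. This is one common approach, not the code of TensorFlow Lite or CMSIS-NN. The helper names (`choose_qparams`, `quantize`, `dequantize`, `int8_dot`) and the sample numbers are hypothetical. Each value is stored in one byte instead of four. The dot product, the core operation of a neural network layer, runs on integers only until a single rescaling step at the end.

```python
def choose_qparams(x_min, x_max, q_min=-128, q_max=127):
    """Pick a scale and zero point mapping [x_min, x_max] onto the int8 range."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (q_max - q_min)
    if scale == 0.0:
        scale = 1.0  # every value is zero; any positive scale works
    zero_point = int(round(q_min - x_min / scale))
    zero_point = max(q_min, min(q_max, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, q_min=-128, q_max=127):
    """Map real values to int8 codes: q = round(x / scale) + zero_point, clamped."""
    return [max(q_min, min(q_max, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(codes, scale, zero_point):
    """Recover approximate real values: x ~= scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in codes]


def int8_dot(qa, za, qb, zb):
    """Dot product of two int8 vectors using integer arithmetic only.

    The running sum needs a wider accumulator than 8 bits. Python ints are
    unbounded, whereas a microcontroller kernel would use a 32-bit integer.
    """
    acc = 0
    for a, b in zip(qa, qb):
        acc += (a - za) * (b - zb)
    return acc


# Hypothetical layer weights and inputs, for illustration only.
weights = [-0.62, -0.10, 0.0, 0.33, 0.91]
inputs = [0.50, 1.20, -0.30, 0.80, 0.05]

w_scale, w_zp = choose_qparams(min(weights), max(weights))
x_scale, x_zp = choose_qparams(min(inputs), max(inputs))
qw = quantize(weights, w_scale, w_zp)
qx = quantize(inputs, x_scale, x_zp)

# One byte per value instead of four for float32.
packed = bytes(q & 0xFF for q in qw)

exact = sum(w * x for w, x in zip(weights, inputs))
# Integer accumulation, then a single rescale back to real units at the end.
approx = int8_dot(qw, w_zp, qx, x_zp) * w_scale * x_scale
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The two results differ only by a small rounding error, which is the "without sacrificing too much accuracy" trade-off in miniature. On a real microcontroller the accumulator would be an explicit 32-bit integer. Production kernels typically also replace the final floating-point rescale with a fixed-point multiply and shift, so the inner loop never touches floating point.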

Machine learning on microcontrollers gives our projects powerful new capabilities, with an almost unlimited range of use cases. Developers are already using TinyML to solve all sorts of problems. Examples include responsive traffic lights that reduce congestion, predictive maintenance for industrial machinery, detection of dangerous insects in crop fields, in-store shelves that warn when stock is low, and private healthcare monitors. The list goes on.

The main industry players quickly recognized the value of TinyML and are helping push the technology even further. A notable example is the collaboration between Google and ARM that combines ARM's CMSIS-NN libraries with the TensorFlow Lite Micro framework. It helps developers who are not experts in embedded programming get their hands on the technology. People like you and me can easily join in. All you need is a computer, a USB cable, and a development board, which can cost as little as $15.

TinyML Website: https://www.tinyml.org/home/