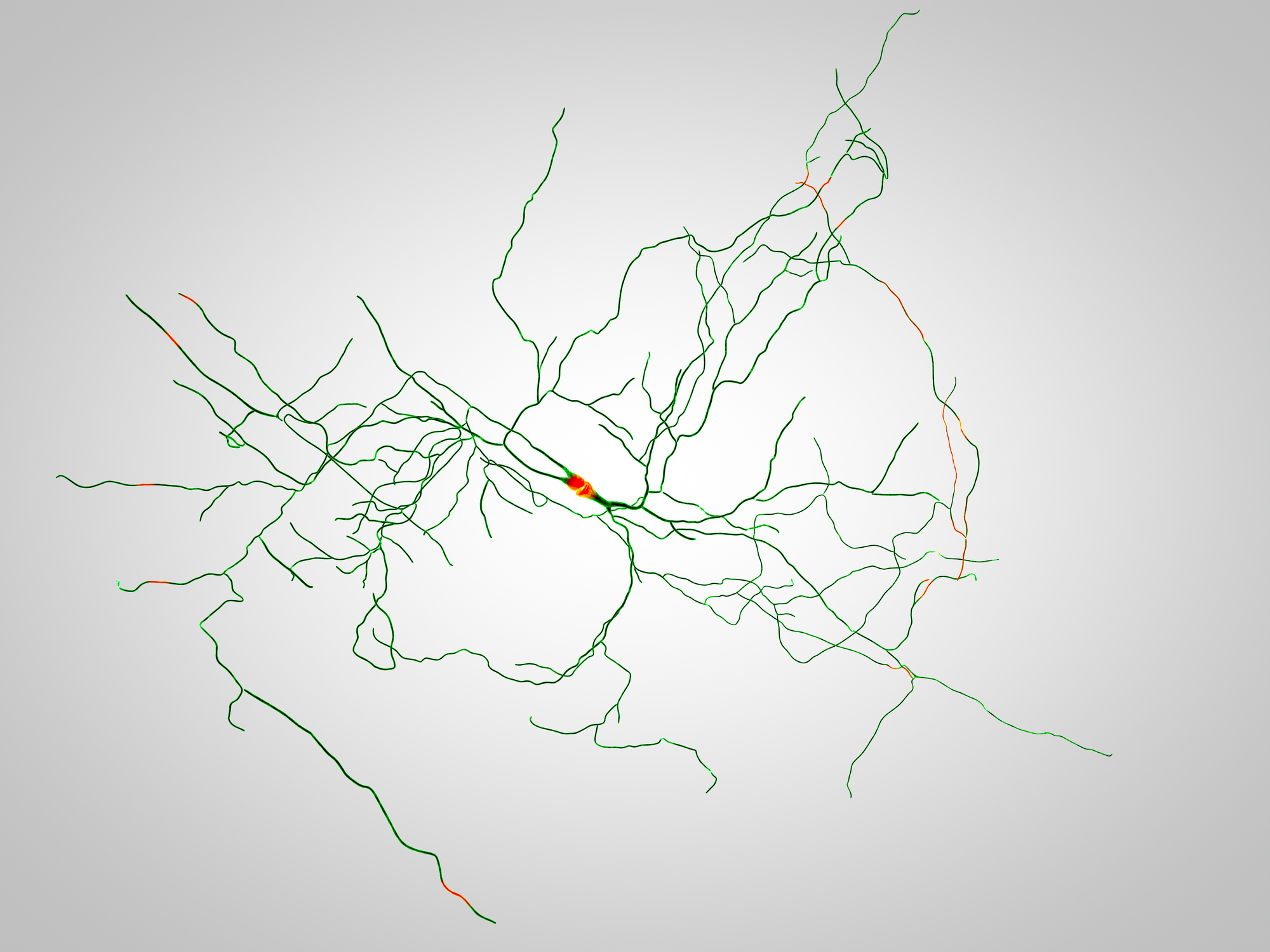

The conventional approach of relying on network connectivity to run artificial intelligence models in the cloud needs some modification to meet the demands of applications ranging from embedded systems to the automobile industry. Before jumping to the role of AI inference at the edge, let us understand the difference between training and inference. Machine learning training is the process of building a model using frameworks and datasets, while inference uses the trained model to make predictions on new data.
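To make the distinction concrete, here is a minimal sketch in plain Python. It is not tied to any real framework: the function names `train` and `infer` and the tiny dataset are assumptions made for illustration. Training fits a straight line to example data, and inference applies the fitted line to a new input.

```python
def train(xs, ys):
    """Training: fit y = w * x + b to a dataset by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def infer(model, x):
    """Inference: use the already-trained parameters to make a prediction."""
    w, b = model
    return w * x + b


# Hypothetical dataset that follows y = 2x + 1.
model = train([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
prediction = infer(model, 10.0)
print(model, prediction)
```

Training needs the whole dataset and does the heavy computation once, while inference only needs the small set of learned parameters. That is why inference is the part that can move onto a constrained edge device.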

Running AI inference at the edge reduces both the inference time and the dependency on network connectivity, which improves overall system performance.

Machine learning or artificial intelligence inference can run in the cloud as well as on a device (hardware). However, when an application requires fast data processing and prediction, running inference in the cloud adds network delay to the response time and creates delays in the system. For non-time-critical applications, cloud inference can do the job, but in a world full of IoT devices and applications that require fast processing, AI inference at the edge solves the problem. At the edge, specialized models run at the point of data capture, which in this case is an embedded electronic device.
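The trade-off can be sketched as a simple latency budget. All numbers below are hypothetical and chosen only for illustration, and the function names are assumptions rather than part of any library. The point is that the cloud path pays for a network round trip on top of the model's compute time, while the edge path does not.

```python
def cloud_latency_ms(network_round_trip_ms, cloud_inference_ms):
    """Cloud path: data travels to the server and back, plus compute time."""
    return network_round_trip_ms + cloud_inference_ms


def edge_latency_ms(edge_inference_ms):
    """Edge path: the model runs where the data is captured, no network hop."""
    return edge_inference_ms


def meets_deadline(latency_ms, deadline_ms):
    """True if a response arrives within the application's time budget."""
    return latency_ms <= deadline_ms


# Hypothetical figures, not measurements: a fast cloud accelerator behind a
# slow network link versus a slower accelerator on the device itself.
DEADLINE_MS = 50.0
cloud = cloud_latency_ms(network_round_trip_ms=80.0, cloud_inference_ms=5.0)
edge = edge_latency_ms(edge_inference_ms=20.0)

print("cloud:", cloud, "ms, meets deadline:", meets_deadline(cloud, DEADLINE_MS))
print("edge:", edge, "ms, meets deadline:", meets_deadline(edge, DEADLINE_MS))
```

Under these assumed numbers the edge device wins even though its accelerator is slower, because the network round trip dominates the cloud path.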

A look at popular hardware for AI inference at the edge

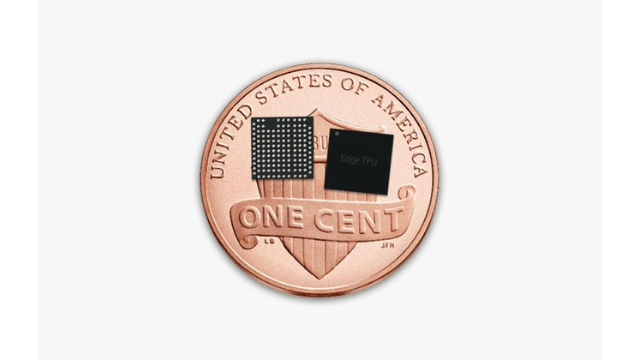

Google Edge TPU

Google Edge TPU is Google’s custom-built ASIC, designed to run AI at the edge for a specific kind of application. When comparing TPUs, CPUs, and GPUs, it is important to note that only the TPU is an ASIC while the other two are not. Also, in TPUs, the ALUs are directly connected to each other, so intermediate results pass from one ALU to the next without going through memory. This keeps the latency of transferring information low.

With the growing need to deploy high-quality AI inference at the edge, Coral offers several prototyping and production products that come with an integrated Google Edge TPU. This small ASIC is built for low-power devices and can execute state-of-the-art mobile vision models such as MobileNet V2 at almost 400 FPS in a power-efficient manner. According to the manufacturer, an individual Edge TPU can perform 4 trillion operations per second (4 TOPS) while using only 2 watts of power. More information on the ASIC and the production products can be found on the manufacturer’s page.
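The figures quoted above translate into two handy numbers: efficiency in TOPS per watt and the time budget per frame. The short sketch below only does that arithmetic on the manufacturer's figures. The helper names are assumptions for illustration, and 400 FPS is treated as an upper bound because the source says "almost 400".

```python
def tops_per_watt(tops, watts):
    """Power efficiency: trillions of operations per second per watt."""
    return tops / watts


def frame_time_ms(fps):
    """Average time available per frame, in milliseconds, at a given FPS."""
    return 1000.0 / fps


# Manufacturer figures quoted in the text: 4 TOPS at 2 W, almost 400 FPS.
edge_tpu_efficiency = tops_per_watt(4.0, 2.0)
edge_tpu_frame_ms = frame_time_ms(400.0)

print(edge_tpu_efficiency, "TOPS per watt")
print(edge_tpu_frame_ms, "ms per frame at 400 FPS")
```

So the Edge TPU delivers 2 TOPS per watt, and a MobileNet V2 inference at close to 400 FPS takes roughly 2.5 ms per frame.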

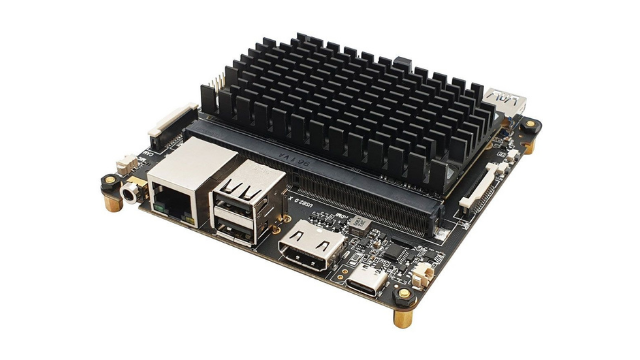

Rock Pi N10

If you are interested in deploying AI or deep learning at the edge and are looking for a single-board computer, the Rock Pi N10 can serve the purpose with Rockchip’s powerful RK3399Pro SoC, which integrates a CPU, NPU, and GPU. The CPU features dual Cortex-A72 cores running at 1.8GHz and quad Cortex-A53 cores clocked at up to 1.4GHz. The NPU delivers up to 3.0 TOPS of computing power and is dedicated to AI and deep learning processing, so it should offer decent performance for the complex calculations these applications involve.

Once you buy this SBC, a detailed guide is available on how to get the Rock Pi N10 up and running with NPU inference. The board has software support for Debian and Android 8.1. You can also expand the storage using the M.2 SSD connector, which supports SSDs of up to 2TB. However, before you get the hardware for your AI inference at the edge, please note that the SBC does not come with onboard Wi-Fi, although an optional Wi-Fi module can be fitted to the board. Find more details on the Seeed Studio product page.

NVIDIA Jetson Nano Developer Kit

One of the most powerful pieces of hardware designed for AI applications is the NVIDIA Jetson Nano Developer Kit, a small, powerful computer that lets you run multiple neural networks in parallel for applications like image classification, object detection, and speech processing. The hardware also supports AWS ML IoT services, so you can easily develop deep learning models and deploy them on the edge. The manufacturer has also provided deep learning inference benchmarks that will help you decide whether the hardware suits your application and requirements.

With the developer kit, you can run various ML frameworks, including TensorFlow, PyTorch, Caffe/Caffe2, Keras, and MXNet. The NVIDIA Jetson Nano Developer Kit comes with a quad-core ARM Cortex-A57 CPU clocked at 1.43 GHz and a 128-core NVIDIA Maxwell GPU. This model is one of the leading hardware options for deploying AI inference at the edge. For more information on the hardware, head to the official product page.

Future of AI inference at the edge

AI computing at the edge brings processing and data storage closer to the source of the data. One promising application of edge AI computing is the autonomous vehicle, where edge AI hardware takes data from the surroundings, processes it, and makes the decision on the spot. This is a major advantage of AI inference at the edge over cloud processing, where sending data over the network can lengthen the response time. Overall, the future of AI inference at the edge is bright, driven by growing AI requirements and the need for fast processing of data using deep learning models.

cover photo: depositphotos.com