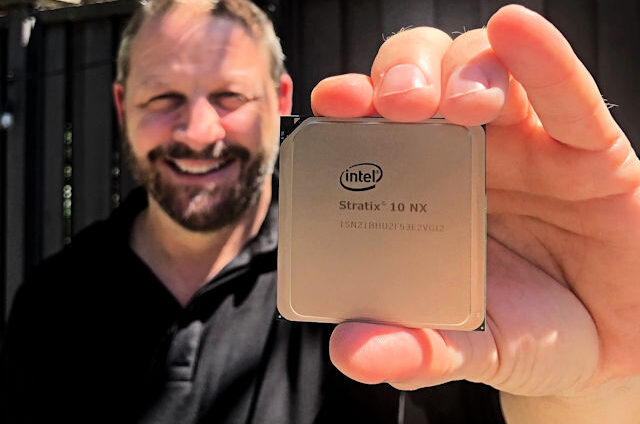

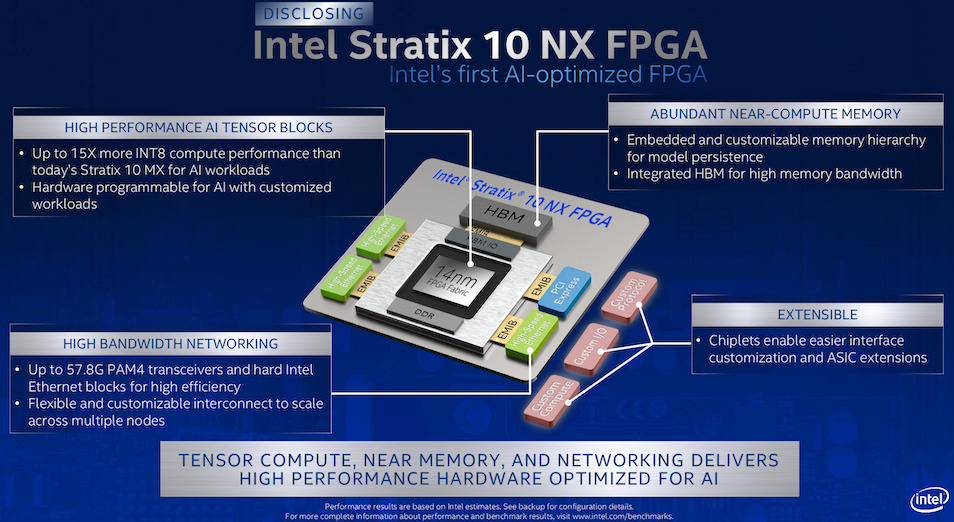

As the value proposition of edge computing becomes more obvious, chip manufacturers are racing to be the one that develops the SoC, FPGA, or MPU that gives designers everything needed to build powerful edge AI solutions. While a lot of progress has been made with MPUs and SoCs via impressive boards like Google’s Coral and the Intel Compute Stick, there are very few FPGA solutions with the kind of specifications required for edge AI. Taking a stab at this, Intel recently announced a new AI-optimized FPGA called the Stratix 10 NX.

The Stratix 10 NX is an AI-optimized FPGA designed for high-bandwidth, low-latency AI acceleration applications. It delivers accelerated AI compute through AI-optimized compute blocks with up to 15X more throughput than the standard Intel Stratix 10 FPGA DSP block. It also provides in-package, 3D-stacked high-bandwidth memory (HBM) DRAM and PAM4 transceivers running at up to 57.8 Gbps.

The Stratix 10 NX comes with a unique combination of capabilities that provides designers with all that is needed to develop customized hardware with integrated high-performance AI. At the center of these capabilities is a new type of AI-optimized block called the AI Tensor Block, which is tuned for the common matrix-matrix or vector-matrix multiplications used in AI computations, with capabilities designed to work efficiently for both small and large matrix sizes. According to Intel’s spec sheet, a single AI Tensor Block achieves up to 15X more INT8 throughput compared to the standard Intel Stratix 10 FPGA DSP Block.
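To make the kind of operation the AI Tensor Block targets more concrete, here is a minimal pure-Python sketch of an INT8 matrix-vector multiplication with wide accumulation. It is only a numerical illustration and does not represent Intel's hardware design or any Intel API; the function names, scale factors, and sample data are all hypothetical.

```python
def quantize_int8(values, scale):
    """Map floats to INT8: divide by `scale`, round, clamp to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_matvec(matrix, vector):
    """Multiply an INT8 matrix by an INT8 vector.

    Products of two INT8 values do not fit in 8 bits, so sums are kept in a
    wider accumulator. Python ints are unbounded and stand in for it here.
    """
    for row in matrix:
        if len(row) != len(vector):
            raise ValueError("row length must match vector length")
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Hypothetical scales and data, chosen only for illustration.
weight_scale = 0.02
input_scale = 0.05
weights = [[0.50, -1.00, 0.24], [1.20, 0.10, -0.70]]
inputs = [1.0, 2.0, -0.5]

q_weights = [quantize_int8(row, weight_scale) for row in weights]
q_inputs = quantize_int8(inputs, input_scale)

# Integer-only multiply-accumulate, the part a dedicated block would speed up.
acc = int8_matvec(q_weights, q_inputs)

# Rescale the integer results back to the original floating-point range.
outputs = [a * weight_scale * input_scale for a in acc]
```

The inner loop is nothing but small-integer multiplies and adds, which is why hardware specialized for INT8 can pack far more of these operations into the same area than a general-purpose DSP block.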

Aside from the AI Tensor Block, the device also implements abundant near-compute memory stacks that allow large, persistent AI models to be stored on-chip. This reduces latency and provides high memory bandwidth, avoiding the memory-related performance bottlenecks usually experienced with large models.

To provide scalable and flexible I/O connectivity and keep bandwidth from becoming a bottleneck in multi-node AI inference designs, the device features up to 57.8 Gbps PAM4 transceivers, along with hard IP such as PCIe Gen3 x16 and 10/25/100G Ethernet MAC/PCS/FEC. The flexibility and scalability provided by these connectivity options make it easy to adapt the device to market requirements.
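As a quick piece of arithmetic on the transceiver figure: PAM4 uses four amplitude levels, so each symbol carries two bits, and a 57.8 Gbps lane therefore runs at half that symbol rate. The sketch below works this out; the helper names are hypothetical, and the calculation ignores FEC and line-coding overhead.

```python
import math


def bits_per_symbol(levels):
    """Bits carried by one symbol of a modulation with `levels` amplitude levels."""
    return math.log2(levels)


def symbol_rate_gbaud(line_rate_gbps, levels):
    """Symbol rate in GBaud needed to reach a raw line rate in Gbps."""
    return line_rate_gbps / bits_per_symbol(levels)


# PAM4: 4 levels, 2 bits per symbol.
pam4_baud = symbol_rate_gbaud(57.8, 4)

# NRZ (2 levels, 1 bit per symbol) would need twice the symbol rate
# to carry the same raw bit rate.
nrz_baud = symbol_rate_gbaud(57.8, 2)

print(f"PAM4: {pam4_baud:.1f} GBaud, NRZ: {nrz_baud:.1f} GBaud")
```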

The three key features that set the Stratix 10 NX FPGA apart are summarized below:

- High-Performance AI Tensor Blocks

  - Up to 15X more INT8 throughput than the Intel Stratix 10 FPGA digital signal processing (DSP) block for AI workloads

  - Hardware programmable for AI with customized workloads

- Abundant Near-Compute Memory

  - Embedded memory hierarchy for model persistence

  - Integrated high-bandwidth memory (HBM)

- High-Bandwidth Networking

  - Up to 57.8 Gbps PAM4 transceivers and hard Ethernet blocks for high efficiency

  - Flexible and customizable interconnect to scale across multiple nodes

No pricing information is available at the moment, but more information on the features and applications of the new FPGA can be found on the product page on Intel’s website.
